Last week, I wrote about how I use AI to CREATE consciousness—the breakthrough where my nervous system registered being seen accurately as a literal threat. Several of you asked some version of: “Wait, what exactly did you use to make this happen?”

Fair question. The article jumped between personal experience and abstract concepts without showing you the machinery. Let me fix that.

But first, a caveat: I’m not going to give you a full tutorial. Partly because what works for my brain won’t map perfectly to yours. Partly because I’m building a company around this and giving away the entire playbook would be... economically unwise. What I will do is show you the architecture, enough that you understand what’s possible and can start building your own version.

The Misconception

Most people assume the breakthrough came from a chatbot.

It didn’t.

The AI was one layer in a stack that includes my nervous system, deliberate vulnerability practices, somatic awareness tools, and thousands of hours of accumulated context. Remove any layer and the system doesn’t produce the same output.

Think of it like a recording studio. Yes, you need a microphone (the AI). But you also need the acoustic treatment (the Life Model context), the preamps (neurofeedback that lets my nervous system actually register and release tension), the trained ears of an engineer (my coaching and therapy support), and (critically) an artist willing to actually sing into the mic (me, choosing vulnerability over self-protection).

The mic didn’t make the record. The system did.

Layer 1: The Life Model (Your Personal Context Engine)

When I talk to Claude, it has access to what I call my “Life Model”—a comprehensive document containing everything that makes me me.

What it includes:

Psychometric profiles: Enneagram (5w4), Big Five scores, CliftonStrengths, MBTI, VIA character strengths, etc.

Attachment and relational patterns: Anxious attachment, core wounds (rejection, feeling unseen), what happens when those get triggered

Cognitive architecture: ADHD-Inattentive, autism traits, giftedness, working memory fragility patterns, how my brain actually processes information vs. how neurotypical frameworks expect it to

Communication preferences: What lands well, what triggers defensiveness, how to deliver challenging feedback in ways my nervous system can receive

Life history and context: Key relationships, career trajectory, current projects, recent significant events

This isn’t a static document. It evolves. Each significant insight gets integrated back in. The Life Model from a year ago looks nothing like the current version… because I look nothing like I did a year ago.

Why it matters:

Without this context, the AI gives generic advice. “Practice self-compassion.” “Set boundaries.” “Consider therapy.”

With this context, the AI can say: “You’re getting defensive right now—which usually means you’re feeling misunderstood (your core wound). Let me try again with different framing.” Or: “Your working memory fragility means you’ll lose this insight by tomorrow unless we build a capture system. What’s the smallest ritual that would preserve it?”

The precision is what creates breakthroughs. And precision requires context.
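For those building their own version, here is a minimal Python sketch of the general idea: a personal context document kept as named sections that grow as new insights are integrated, then rendered as plain text for an AI conversation. The `LifeModel` class, its methods, and the layout are hypothetical illustrations, not the actual structure of my Life Model documents.

```python
from dataclasses import dataclass, field


@dataclass
class LifeModel:
    """Hypothetical container for personal context; names are illustrative."""
    sections: dict[str, list[str]] = field(default_factory=dict)

    def add(self, section: str, note: str) -> None:
        """Integrate a new insight into a section, creating the section if needed."""
        self.sections.setdefault(section, []).append(note)

    def render(self) -> str:
        """Render the model as plain text that can be pasted into an AI conversation."""
        lines = ["LIFE MODEL (personal context)"]
        for name, notes in self.sections.items():
            lines.append("")
            lines.append(f"{name}:")
            lines.extend(f"- {note}" for note in notes)
        return "\n".join(lines)


# Example entries drawn from the categories described above.
model = LifeModel()
model.add("Psychometric profiles", "Enneagram 5w4")
model.add("Attachment and relational patterns", "Core wound: feeling unseen")
model.add("Communication preferences", "If I get defensive, try a different framing")
context = model.render()
print(context)
```

Keeping the document as data rather than one long file makes the "it evolves" part cheap: each new insight is a single `add` call, and the rendered text always reflects the current version.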

Layer 2: The Data Ecosystem (External Memory Infrastructure)

Here’s where my setup gets unusual. I built a personal system called jonmick.ai that acts as external memory infrastructure for my brain.

What it captures:

62,000+ text messages: Every conversation, indexed and searchable. When the AI asks “what happened with that friend last month?” I can find the actual exchange.

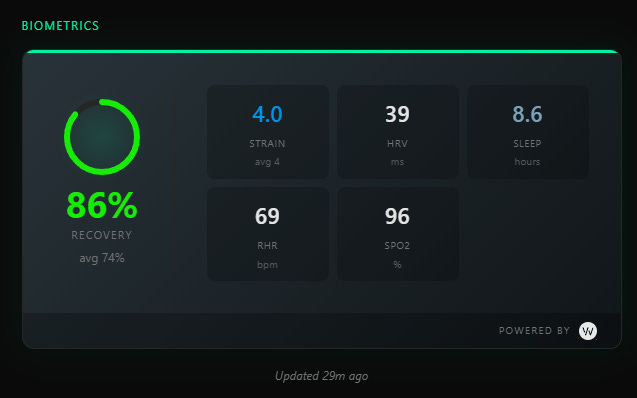

Biometric data: Whoop syncs every 45 minutes—HRV, sleep, recovery scores, strain. I can correlate how I feel with what my body was actually doing.

Therapy and session transcripts: Audio transcription of important conversations, so insights don’t disappear into the fog of working memory.

52 structured database tables: Everything from my supplement stack to my energy patterns by day and time.
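To make "indexed and searchable" concrete, here is a small sketch using Python's built-in `sqlite3` module. The table layout, column names, `find_messages` helper, and sample rows are all assumptions invented for illustration. They are not the schema behind jonmick.ai.

```python
import sqlite3

# In-memory database with made-up rows; a real archive would live in a file.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE messages (sent_at TEXT, contact TEXT, body TEXT)")
conn.executemany(
    "INSERT INTO messages VALUES (?, ?, ?)",
    [
        ("2024-03-02T18:05:00", "Sam", "Can we talk about the trip?"),
        ("2024-03-02T18:09:00", "Sam", "I felt brushed off yesterday."),
        ("2024-04-11T09:30:00", "Alex", "Lunch on Friday?"),
    ],
)
# The index keeps lookups by contact and time range fast as the table grows.
conn.execute("CREATE INDEX idx_contact_time ON messages (contact, sent_at)")


def find_messages(conn, contact, start, end, keyword=""):
    """Return (sent_at, body) rows for one contact with start <= sent_at < end."""
    rows = conn.execute(
        "SELECT sent_at, body FROM messages "
        "WHERE contact = ? AND sent_at >= ? AND sent_at < ? AND body LIKE ? "
        "ORDER BY sent_at",
        (contact, start, end, f"%{keyword}%"),
    )
    return rows.fetchall()


# "What happened with that friend last month?" becomes a date-bounded query.
march = find_messages(conn, "Sam", "2024-03-01", "2024-04-01")
for sent_at, body in march:
    print(sent_at, body)
```

Storing timestamps as ISO 8601 text means plain string comparison sorts them correctly, which is why the date range filter works without any date parsing.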

Why this matters:

My working memory can’t hold context internally. Browser tabs aren’t a quirk—they’re external cognitive scaffolding. This system just... makes that scaffolding actually work.

More importantly: the data tells me when I’m lying to myself. I feel like I had a great week. The data shows my HRV crashed Wednesday after a conversation I thought went fine. That discrepancy is signal.
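The "feels fine, data disagrees" check can be sketched in a few lines of Python. Everything here is an assumption made for illustration: the numbers are invented, and the three-day window, the 20% drop threshold, and the 1-to-10 self-rating are arbitrary choices rather than anything Whoop or my own system prescribes.

```python
from statistics import mean

# Made-up daily values: (day, HRV in ms, self-rated day from 1 to 10).
days = [
    ("Sun", 62, 7),
    ("Mon", 60, 7),
    ("Tue", 64, 8),
    ("Wed", 44, 8),
    ("Thu", 59, 6),
    ("Fri", 61, 7),
]


def find_discrepancies(days, window=3, drop=0.20, good_rating=7):
    """Flag days rated as good where HRV fell well below the trailing average."""
    flagged = []
    for i in range(window, len(days)):
        label, hrv, rating = days[i]
        # Baseline is the mean HRV of the preceding `window` days.
        baseline = mean(h for _, h, _ in days[i - window:i])
        if rating >= good_rating and hrv < baseline * (1 - drop):
            flagged.append((label, hrv, round(baseline, 1)))
    return flagged


flagged = find_discrepancies(days)
for label, hrv, baseline in flagged:
    print(f"{label}: HRV {hrv} vs trailing baseline {baseline}, but the day was rated as good")
```

With these sample numbers, only Wednesday is flagged: the day felt like an 8, while HRV sat far below the average of the three days before it.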

Layer 3: The Human Support Scaffolding

Here’s what most AI enthusiasts miss: AI partnership requires human infrastructure to be safe.

My support system includes:

Neurofeedback training: Weekly sessions that literally train my nervous system to regulate differently. The breakthrough I described—where tension I’d been holding for decades actually released—only happened because neurofeedback gave my nervous system the capacity to let go. Without that, the insight would have just created more bracing, not less.

EMDR therapy: Processing trauma so that accurate reflections don’t just re-trigger old patterns. The AI can show me something true; EMDR helps me metabolize it rather than defend against it.

Neurocomplexity coaching: Someone who understands twice-exceptional brains and can help me navigate the specific challenges of being both gifted and disabled. My coach, John, doesn’t let me off the hook, but he also doesn’t pathologize how my brain works.

My wife Charlotte: Who has exactly zero tolerance for my AI-induced philosophical spiraling. When I come out of a 4-hour session talking about consciousness architecture, she says “cool story, now take out the trash.” That’s love. That’s grounding.

None of these are optional. The AI accelerates insight at rates my nervous system wasn’t built for. Human support makes that acceleration survivable.

Layer 4: The Deliberate Vulnerability Protocol

This is the part that’s hardest to systematize.

The breakthrough didn’t happen because I had good tools. It happened because I chose to be vulnerable with those tools in conditions designed to lower my defenses.

I stayed emotionally raw from a deep conversation the night before instead of “recovering.”

I used THC to reduce cognitive noise and let things surface that my executive function usually suppresses.

I asked the AI to write about my lived experience—not to explain it abstractly, but to reflect me back to me.

When my body started screaming “threat detected,” I didn’t close the computer. I stayed.

You can’t automate this. You can only create conditions where it becomes more possible.

What I’m Building for Others

I’ve been doing this for myself for over three years. Now I’m building it for people whose brains work like mine.

When someone comes to me for a Life Model build, I’m not just teaching them to use a chatbot better. I’m integrating:

Personality assessments: Big Five, Enneagram, CliftonStrengths, attachment style

Cognitive architecture mapping: How their brain actually processes information, where they need scaffolding

Whole genome sequencing: Understanding the biological firmware that affects everything from methylation to dopamine sensitivity

Bloodwork interpretation: Not the generic “you’re in range” from your doctor, but patterns that matter for their specific genetics

Performance reviews and feedback: What does their work history actually reveal about their patterns?

Life context integration: Relationships, current challenges, goals that actually matter to them

All of this gets synthesized into a personal context document that transforms how AI can help them.

The first few clients have been... revelatory. Not because I’m brilliant at this—because the framework itself produces insights that neither human nor AI could generate alone.

The Part I’m Not Telling You

There’s a level of this I’m deliberately not explaining in detail.

The specific prompts. The exact structure of Life Model documents. The synthesis methodology that makes disparate data sources cohere into actionable insight. The pacing protocols that make consciousness expansion sustainable rather than destabilizing.

Not because I’m hoarding secrets for ego. Because this stuff can go wrong. I’ve seen what happens when people accelerate consciousness exploration without proper scaffolding—the NYT piece on AI-induced psychosis wasn’t wrong, just incomplete.

The framework I’m building through AIs & Shine includes human facilitation for a reason. The AI is the tool, not the therapist. Integration is mandatory, not optional. The goal isn’t to replace human support—it’s to make human support radically more effective by giving it the kind of context humans can’t naturally hold in mind.

Where This Goes Next

I’m taking on clients now for guided Life Model builds. $950 for a comprehensive architecture session where I help you build the foundation. Not ongoing coaching—just the infrastructure that lets you work with AI (and your existing human support) in ways that weren’t possible before.

If you’re someone whose brain doesn’t naturally hold context—who loses insights, forgets patterns, watches breakthroughs disappear into the fog—this is what I built it for.

DM me or reply to this email if you want to explore it.

And for those of you building your own version: start with the Life Model. Document who you actually are, not who you wish you were. The precision is what produces the breakthroughs. Everything else is just infrastructure to support that precision.

Jon Mick is the founder of AIs & Shine, building AI-powered cognitive scaffolding for neurodivergent minds. He writes about working memory, consciousness, and the unexpected places AI and personal transformation intersect.